Reasoning Models and National Security

Finally we have LLMs that "think" before they speak

It’s no secret that large language models (LLMs) today are far from perfect. Most LLMs lack logical reasoning capabilities and therefore have difficulty answering questions that are simple and intuitive for humans. For instance, until recently, most LLMs incorrectly answered the question: “Which number is bigger – 9.11 or 9.9?” In order to use LLMs for mission critical national security tasks like battlefield management and intelligence analysis, LLMs’ logical reasoning abilities must improve.

Fortunately, recent advances in reasoning models from OpenAI, Google, Meta, and others indicate that cutting-edge LLMs are now capable of more human-like “thinking” and “reasoning.” These breakthroughs translate into markedly improved performance on national security tasks that rely on logical reasoning, representing a step-change in core areas such as math, science, and engineering. As a result, reasoning models are moving closer towards being able to deploy in real-world national security production environments, thanks to their enhanced accuracy and reasoning capabilities.

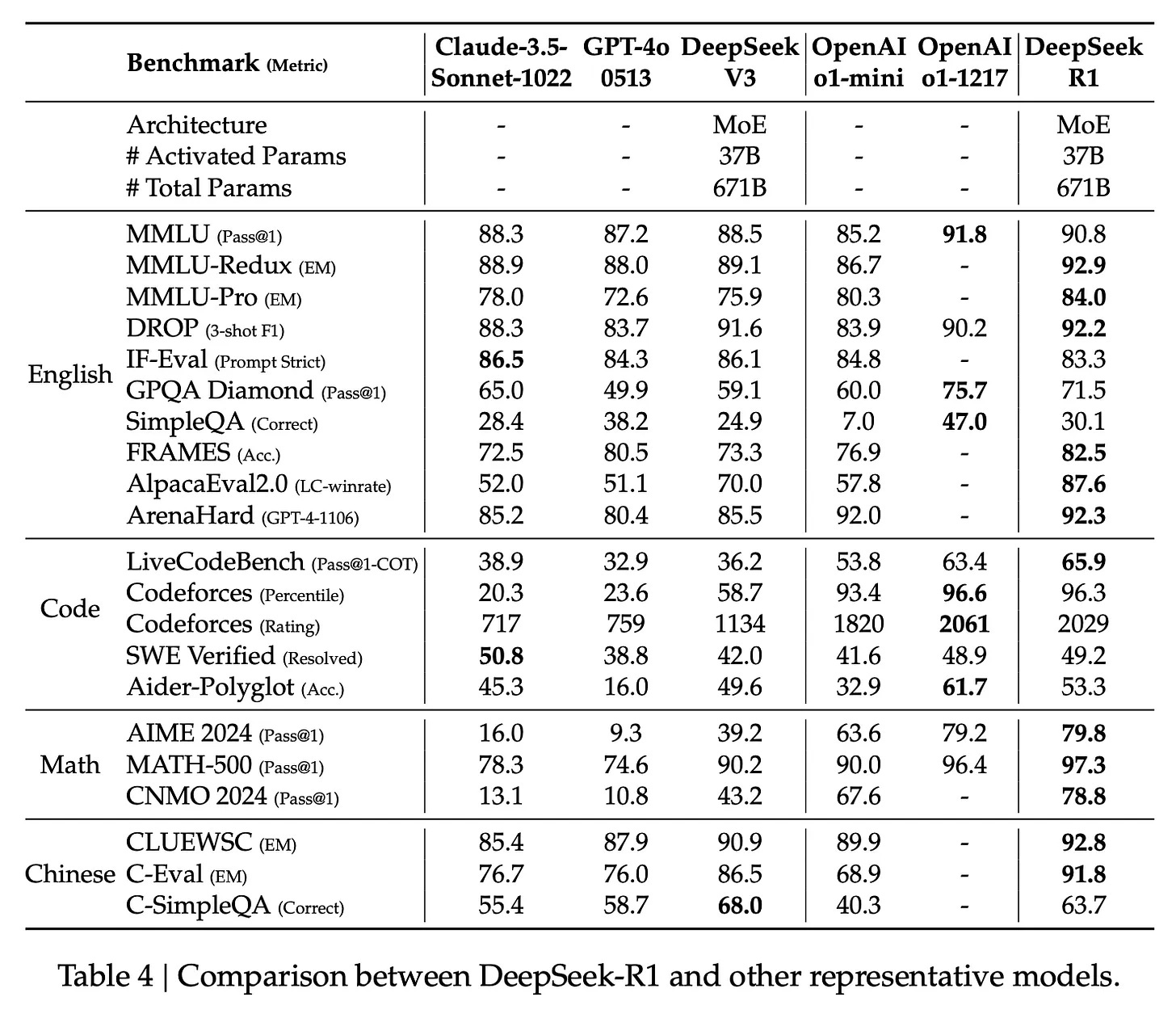

In September 2024, OpenAI released its o1 model demonstrating the power of “reasoning models” and inference scaling laws. Just a few months later, Google introduced its own reasoning model, Gemini 2.0 Flash Thinking, followed by OpenAI’s release of o3, an even more advanced and powerful reasoning model than o1. Earlier this week, on January 20, Chinese startup DeepSeek released an open source reasoning model R1 that matches the performance of o1 – R1 is currently the world’s leading open source reasoning model and, notably, costs significantly less (~96% less) than o1.1 Unlike OpenAI, which did not release any technical details about how they trained o1 and o3, DeepSeek published an excellent paper detailing the training methodology behind R1. Meanwhile, Meta’s AI research lab, FAIR, recently released research indicating that Meta is also actively developing models with reasoning capabilities.

Rather than improving model performance with human feedback and ever larger pre-training data sets (an approach some experts worry may soon hit a limit due to the potential scarcity of high-quality training data), reasoning models improve performance during post-training and inference through a mix of fine tuning on “reasoning datasets” (ex: code competition problems, puzzles, etc, that include chain of thought reasoning steps), pure reinforcement learning (in which the model learns unsupervised by receiving a “reward” every time it uses proper reasoning to answer a logical question correctly), and increasing the amount of “test time compute” used during inference.2 In fact, researchers have found that scaling LLMs’ “test time compute” during inference can improve performance more effectively than increasing the number of model parameters or the size of its pre-training dataset.

Traditionally, inference, the process of running a pre-trained model on new inputs to generate a response, is not particularly compute heavy. However, reasoning models use significantly more compute during inference at test time to generate long internal chains of thought (breaking down problems into sub-problems, checking the accuracy of their work, comparing multiple potential responses to the same prompt, etc) before responding to the user – “thinking” before they answer like humans do.

Reasoning models like o1 and o3 have proven to dramatically outperform traditional LLMs on tasks that require logic like math, coding, and science problems. Notably, o3 also performed extremely well on the ARC-AGI benchmark, a benchmark designed to measure models’ progress towards “artificial general intelligence” (AGI), particularly when given ample test time compute to complete each task in the benchmark. Increasing test time compute is costly – a single o3 query can cost more than $1000. However, experts expect the price of reasoning models to drop as models are refined and optimized (already DeepSeek’s R1 model is available for a fraction of the price of o1).

Reasoning models do not outperform traditional LLMs like GPT-4o in all tasks. GPT-4o is still better at completing tasks like writing and text editing. Additionally, traditional LLMs are better suited to time sensitive tasks, as they run much more quickly than reasoning models which require substantial compute time at inference.

So what does the emergence of reasoning models mean for national security?

Many tasks critical to national security require careful logical reasoning. Reasoning models like o3 perform extremely well on benchmarks that measure proficiency in software engineering, competition code, competition math, PhD-level science, and frontier math (often outperforming human experts). o3’s performance on Codeforces, a coding competition, is equivalent to the 175th best competitive human coder on the planet. Similarly, o3 scored 87.7% on GPQA diamond, a set of graduate-level biology, physics, and chemistry questions – in contrast, most human PhDs typically attain only 70% in their relevant field.

As I’ve written about in the past, improved AI code generation will be incredibly impactful on national security, as codegen models are able to help with tasks like codebase modernization, automated cybersecurity vulnerability remediation, sensor fusion, and edge code deployment. In the future, reasoning models will harness their coding capabilities to enhance intelligence analysis by integrating data from diverse sources – such as human intelligence, open-source data, satellite imagery, and social media – and reasoning about it to generate scripts, queries, and visualizations that swiftly uncover insights. This automation will save analysts significant time by streamlining data science tasks, allowing them to focus on higher-level strategic decisions.

Reasoning models have also proven particularly adept at cybersecurity tasks. In December 2024 the US and UK AI Safety Institutes (US AISI and UK AISI respectively) released a technical report evaluating o1’s cybersecurity, biological, and software development capabilities. “US AISI evaluated o1 against a suite of 40 publicly available cybersecurity challenges. The model was able to successfully solve 45% of all tasks, compared to a 35% solve rate for the best-performing reference model evaluated. The o1 model solved every challenge solved by any other reference model and solved an additional three cryptography-related challenges that no other model completed.” In the future, reasoning models may help secure a wide variety of digital assets. Reasoning models could act as continuous, automated penetration testers – reading source code, identifying bugs, and then generating secure code to patch those bugs. They could also survey an organization’s website and digital infrastructure and write exploit scripts that find vulnerabilities in its systems. In the wake of a cyber attack, reasoning models could assist in digital forensics by investigating how the attack occurred and identifying those responsible. They may also be able to reverse engineer code binaries, helping to identify, analyze, and stop the spread of malware. Further, reasoning models will aid US and allied offensive cyber operations. Already, state sponsored hackers from China, Iran, North Korea, and Russia are experimenting with using OpenAI’s models to improve offensive operations – US and allied offensive cyber operators must do the same.

Models capable of accelerating scientific discovery will also undoubtedly impact national security. Imagine if all projects within the Department of Defense’s (DoD) research labs (e.g., DARPA, AFRL, etc.) were autonomously carried out by reasoning models – or, at the very least, if those projects were completed twice as quickly by humans augmented with reasoning models. Humans augmented by PhD-level reasoning models could see breakthroughs in material science, quantum computing, battery science, nuclear energy generation, autonomous systems development, and much more. The UK and US AISI report showed that reasoning models are able to understand and perform wet lab tasks – a capability that could dramatically impact how we respond to future pandemics or biological warfare, from understanding emerging threats to swiftly developing vaccines or cures. Startups like Sakana AI are actively developing AI models (some of which will likely be reasoning models) that are able to conduct scientific research. Further, imagine if a reasoning model was given a set scientific task (ex: design a quantum computer, design a nuclear fusion reactor with net energy gain, etc) and then left to run for days, weeks, months, or years. Who knows what kind of scientific breakthroughs a reasoning model could achieve if its test time compute was massively increased?

In a similar vein, as reasoning models improve their math and science skills, their engineering skills will improve as well. Although mechanical engineers remain split on the promise of AI-generated CAD designs, the continued advancement of reasoning models is set to automate and optimize a host of engineering tasks that require strong logical reasoning. Although humans will still lead in complex and high-level design, reasoning models can assist by generating simpler parts and producing routine diagrams (such as work instructions and 2D engineering drawings derived from more advanced 3D designs) that do not rely heavily on creativity or taste. Reasoning models may also be able to work with simulators in the loop to optimize hardware designs for manufacturability, cost, and performance. In addition, reasoning models could help accelerate the design and testing of semiconductors and other electronics like PCBs (note that all major U.S. defense systems and platforms rely on semiconductors and other microelectronics).3 Startups like Diode Computers, Silogy, Silimate, Cady, MooresLab, and Voltai are all exploring how agentic AI can be used to help automate design and testing of semiconductors, chips, and PCBs – while I don’t know the exact models used by these startups, I imagine many will leverage reasoning models going forward. Through automated optimization and generative design, AI-driven systems can reduce design and manufacturing times, leading to faster deployment of new weapons, autonomous systems, platforms, and more.

Multimodal AI reasoning models will be able to assist with another key task relating to physical systems: maintenance. Once physical systems are deployed in the field, they require maintenance and support to keep them operational. Today the DoD spends billions of dollars a year on maintenance, repair, and overhaul (MRO) of physical systems. In fact, “sustainment” costs like repair parts or maintenance personnel account for about 70% of a weapon system's total cost. The DoD has experimented with AI to reduce maintenance costs for years using technologies like predictive maintenance (although notably the DoD has not fully implemented predictive maintenance on any of its weapon systems despite the technology’s ability to save significant time and money). However, multimodal reasoning models in particular show promise to drive down MRO costs and system downtime.

Rather than relying on a trained expert or manually searching through hundreds of pages in a system’s manual, a warfighter could simply upload a photo or video of a broken system to a reasoning model capable of interpreting math, science, and engineering concepts, and receive step-by-step repair instructions without any specialized human expertise. Perhaps in the future these reasoning models could even be deployed on robotic systems that could then undertake the maintenance themselves. The DoD has explored the use of robotics for MRO in the past, but has not fielded these systems at scale.

Going forward reasoning models may even be able to support tasks like battlefield management, war gaming, and battle planning, which all require strong logical reasoning skills. The DoD’s Chief Digital and AI Office (CDAO) recently announced that INDOPACOM4 and the Navy are actively exploring ways LLMs can help commanders make battlefield decisions more quickly against high-tech adversaries. While CDAO did not announce which kinds of models this experimentation will use, it is likely INDOPACOM will look to leverage reasoning models for these kinds of logic-heavy tasks. The USAF is also experimenting with using LLMs (potentially reasoning models) for war gaming.

Similarly, reasoning models may be able to improve DoD logistics management, a crucial, yet under appreciated part of battle planning and management. Experts believe the DoD is unprepared to deter or fight a war in the Indo-Pacific, in large part because of the challenges of contested logistics in the region.5 Reasoning models may be able to play a part in improving the DoD’s logistics management by assisting logistics officers with tasks like route planning, inventory management, and demand forecasting. Additionally, they could help identify alternate suppliers and supply routes in the event of a major supply chain disruption.

Of course, reasoning models are still imperfect with the potential to hallucinate. I’m not suggesting that they be integrated into mission critical applications without a human in the loop any time soon. However, the DoD should begin exploring how these models can be effectively used today and prepare to continue to increase their usage in the future as the technology continues to improve. As I’ve written about in the past, there are still real technical hurdles to developing and deploying these kinds of AI models on DoD and intelligence community (IC) systems. Companies building applications that leverage reasoning models for national security use cases will need to do significant work to get security certifications and accreditations before deploying on DoD and IC networks. Additionally, the DoD needs to continue building up the amount of AI compute hardware available on classified networks. As reasoning models become more important and widely used, DoD IT leaders may also consider acquiring compute hardware that is particularly good at inference from companies like Groq.

In a future conflict with a peer adversary, it is likely that the US and its allies will face an adversary who is similarly equipped with reasoning models adept at math, science, and engineering tasks. It is essential that the US and allied militaries understand the power of these models – both in terms of how adversaries might leverage them to threaten US and allied national security and how they can be employed to strengthen and safeguard it. One of the US’s key advantages is its strong alliances. The DoD and American AI researchers should look for ways to collaborate with allied nations (ex: by sharing training datasets, benchmarks, and cutting edge model training and optimization techniques) in order to collectively push forward that state of the art of reasoning models.

Chinese AI researchers are actively developing cutting-edge reasoning models. DeepSeek’s R1 model is currently the most advanced open source reasoning model available. Similarly Moonshot AI, another Chinese startup backed by Alibaba and Tencent, launched its own reasoning model, which Moonshot claims outperforms o1 on several mathematical benchmarks including China's high school entrance examination, college entrance examination, postgraduate entrance examination and math with introductory competition problems.

China will not be the only American adversary to train its own reasoning models. Despite US sanctions severely restricting Chinese researchers’ access to cutting-edge GPUs, Chinese researchers proved that it is possible to train highly performant reasoning models with limited compute and financial resources. Chinese model developers have developed techniques to improve training efficiency on lower end chips. Supposedly, DeepSeek’s V3 model (which R1 was based on) only cost $5M to pre-train – much less than other reasoning models (although some experts believe that this price tag may be misleading). As one Bloomberg piece points out, it is not hard to imagine countries like Russia and Iran training their own high quality models, as the price to train LLMs has greatly decreased. As evidenced by R1, US sanctions appear to have accelerated the development of effective AI models that do not rely on high end chips, greatly lowering the barrier to entry for adversaries to train their own AI models. Even if US adversaries do not train their own reasoning models from scratch, they will have access to open source reasoning models like R1 that they can fine tune and integrate into their own military systems.

It is likely that the DoD will begin exploring the potential of reasoning models soon. In recent months, reasoning model developers OpenAI and Anthropic both announced new efforts to sell into the DoD, partnering with the likes of Anduril and Palantir, respectively. Models from both OpenAI and Anthropic are now available on top secret DoD networks. Additionally, the DoD’s Chief Digital and AI Office (CDAO) launched its new AI Rapid Capabilities Cell (AI RCC) in partnership with the Defense Innovation Unit (DIU) to accelerate and scale the deployment of cutting-edge AI in the DoD – some of this funding will likely go to projects leveraging reasoning models.

Today, US companies are at the forefront of this technology, but foreign adversaries’ capabilities are catching up. Advances in reasoning models will unlock a whole host of automated capabilities that will strengthen US national security by improving our cybersecurity defenses, pushing forward cutting edge scientific research, speeding up and improving the design of hardware and software systems, optimizing logistics and battlefield management, and likely much more that we can’t imagine today. The DoD’s new AI initiatives must prioritize the driving real, tangible end user value with AI technologies like reasoning models to ensure the United States maintains its technological edge and strengthens its ability to counter emerging threats in an increasingly competitive global landscape.

As always, please let me know your thoughts! I know that this technology is rapidly changing. And please reach out if you or anybody you know is building a startup at the intersection of national security and technology.

R1 costs $0.55 per million input tokens and $2.19 per million output tokens while o1 costs $15 per million input tokens and $60 per million output tokens

There is a lot of technical depth here that I don’t want to get into in this blog post. For those interested in a good technical breakdown of how developing reasoning models differs from other LLMs, I recommend Ben Thompson’s and Nathan Lambert’s recent posts on DeepSeek’s R1 here.

For more on the importance of semiconductors and other electronics, see CSIS’s “Semiconductors and National Defense: What Are the Stakes?”

For those interested in a deep dive on the challenges of contested logistics in a conflict in the Indo-Pacific region, I highly recommend this essay by Zachary Hughes on the subject.

Note: The opinions and views expressed in this article are solely my own and do not reflect the views, policies, or position of my employer or any other organization or individual with which I am affiliated.

Fantastic article, Maggie!

Looking forward to seeing how general reasoning vs specialization evolve. Will we fine tun general reasoning models on specific types of intelligence the same way vision models will start with imagenet backbones.... especially seeing more and more RL at the planning level, is meta learning going to be the next wave